Abstract

Dedicated to ZFS administration.

Zpool Administration

Basic Commands

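- List all imported pools with their size, usage, and health:

zpool list

- List a single pool:

zpool list <name>

- Also show per-vdev usage for a pool (here zroot):

zpool list -v zroot

- Show only pools that have errors or are unavailable (prints "all pools are healthy" when there are none):

zpool status -x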
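For scripts, zpool list -H -o name,health prints one line per pool with no header and tab-separated fields. The sketch below builds on it. It reports each pool whose health is not ONLINE and returns non-zero when it finds one. The function name check_pools is hypothetical.

```shell
#!/bin/sh
# Report every pool whose health is not ONLINE.
# Exit status: 0 = all ONLINE, 1 = at least one unhealthy pool, 2 = zpool failed.
# -H drops the header and separates fields with tabs; -o picks the columns.
check_pools() {
    list=$(zpool list -H -o name,health) || {
        echo "zpool list failed" >&2
        return 2
    }
    # Skip empty lines (NF == 0); print "name health" for non-ONLINE pools.
    unhealthy=$(printf '%s\n' "$list" | awk 'NF && $2 != "ONLINE" { print $1 " " $2 }')
    if [ -z "$unhealthy" ]; then
        echo "all pools ONLINE"
        return 0
    fi
    echo "$unhealthy"
    return 1
}
```

A call such as check_pools || echo "a pool needs attention" could run from cron. The separate exit status 2 keeps a broken zpool command from being mistaken for a healthy system.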

Creating Pools and VDEVs

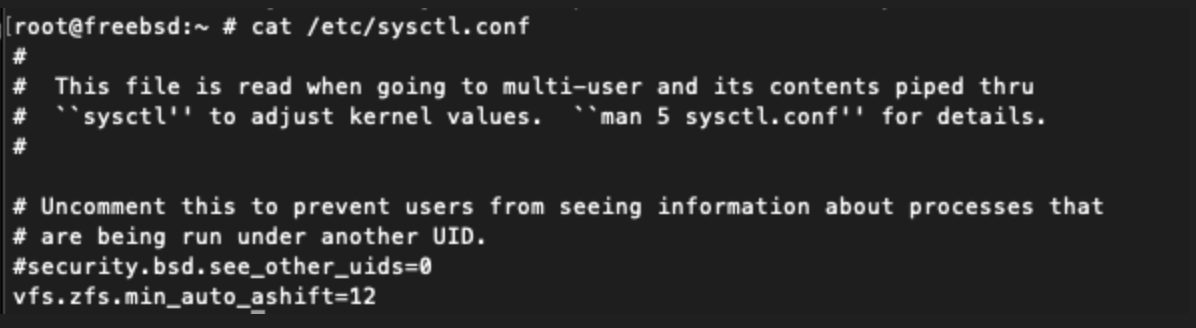

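- Make sure new vdevs use ashift 12 (4K sectors). On FreeBSD, set this sysctl before creating the pool, and put vfs.zfs.min_auto_ashift=12 in /etc/sysctl.conf to keep it across reboots:

sysctl vfs.zfs.min_auto_ashift=12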
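ashift is the base-2 logarithm of the sector size ZFS writes with. 4K (4096-byte) sectors therefore mean ashift 12, and legacy 512-byte sectors mean ashift 9. The sketch below does that arithmetic. ashift_for is a hypothetical helper, and it assumes the size is a power of two, such as the sector size diskinfo(8) reports.

```shell
#!/bin/sh
# ashift = log2(sector size in bytes), e.g. 512 -> 9, 4096 -> 12.
# The body runs in a subshell ( ... ) so its variables do not leak.
ashift_for() (
    size=$1
    n=0
    # Halve until we reach 1, counting the halvings.
    while [ "$size" -gt 1 ]; do
        size=$((size / 2))
        n=$((n + 1))
    done
    echo "$n"
)
```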

- Select the disks to use (here da1, da2, and da3; da0 is already partitioned):

ls -al /dev/ | grep da

crw-r----- 1 root operator 0x5a Nov 14 02:51 da0

crw-r----- 1 root operator 0x5b Nov 14 02:51 da0p1

crw-r----- 1 root operator 0x5c Nov 14 02:51 da0p2

crw-r----- 1 root operator 0x5d Nov 14 02:51 da0p3

crw-r----- 1 root operator 0x6a Nov 15 18:46 da1

crw-r----- 1 root operator 0x6d Nov 15 18:46 da2

crw-r----- 1 root operator 0x70 Nov 15 18:46 da3

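- Partition each disk with gpart(8): a GPT scheme, a 1 GB swap partition, and a ZFS partition that uses the rest of the disk, each with its own label.

- In production, the labels should reflect each drive's serial number and physical location, so a failed drive is easy to find and swap out.

The commands below cover da1 through da5; run only the ones for the disks you actually have.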

gpart create -s gpt da1

gpart add -a 1m -s1g -l sw1 -t freebsd-swap da1

gpart add -a 1m -l zfs1 -t freebsd-zfs da1

gpart create -s gpt da2

gpart add -a 1m -s1g -l sw2 -t freebsd-swap da2

gpart add -a 1m -l zfs2 -t freebsd-zfs da2

gpart create -s gpt da3

gpart add -a 1m -s1g -l sw3 -t freebsd-swap da3

gpart add -a 1m -l zfs3 -t freebsd-zfs da3

gpart create -s gpt da4

gpart add -a 1m -s1g -l sw4 -t freebsd-swap da4

gpart add -a 1m -l zfs4 -t freebsd-zfs da4

gpart create -s gpt da5

gpart add -a 1m -s1g -l sw5 -t freebsd-swap da5

gpart add -a 1m -l zfs5 -t freebsd-zfs da5
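The per-disk commands above follow a single pattern. The sketch below loops over a list of disks and only prints the gpart commands, unless APPLY=1 is set. The names run, partition_disks, and APPLY are hypothetical. The labels follow the disk number, not the serial number recommended for production.

```shell
#!/bin/sh
# Dry run by default: print each command; set APPLY=1 to execute instead.
run() {
    if [ "${APPLY:-0}" = 1 ]; then
        "$@"
    else
        echo "$*"
    fi
}

# Usage: partition_disks DISK...
# Assumes daN device names; N becomes the label suffix (da1 -> sw1, zfs1).
partition_disks() {
    for disk in "$@"; do
        n=${disk#da}
        run gpart create -s gpt "$disk" || return 1
        run gpart add -a 1m -s 1g -l "sw$n" -t freebsd-swap "$disk" || return 1
        run gpart add -a 1m -l "zfs$n" -t freebsd-zfs "$disk" || return 1
    done
}
```

partition_disks da1 da2 da3 prints the nine commands for review. APPLY=1 partition_disks da1 da2 da3 runs them and stops at the first failure.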

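- Verify the partitions and their labels. Labels set with gpart add -l appear under /dev/gpt/ (for example /dev/gpt/zfs1):

gpart show -l <device>

glabel status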

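zpool create <pool-name> /dev/gpt/zfs1 /dev/gpt/zfs2 /dev/gpt/zfs3

- Listing partitions without a vdev type such as mirror or raidz1 makes each one its own vdev, so this pool is a stripe with no redundancy.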
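Before creating a pool, it can help to confirm that every label exists and to choose the vdev type explicitly. The sketch below does both. It assumes GPT labels live under /dev/gpt, and it only prints the zpool command unless APPLY=1 is set. The names GPT_DIR, run, create_pool, and APPLY are hypothetical.

```shell
#!/bin/sh
# Directory holding GPT labels; overridable (hypothetical knob).
GPT_DIR=${GPT_DIR:-/dev/gpt}

# Dry run by default: print the command; set APPLY=1 to execute instead.
run() {
    if [ "${APPLY:-0}" = 1 ]; then "$@"; else echo "$*"; fi
}

# Usage: create_pool POOL VDEV_TYPE LABEL...
#   VDEV_TYPE: "stripe" (no keyword, no redundancy), "mirror", "raidz1", ...
create_pool() {
    pool=$1; vtype=$2; shift 2
    # Check each label, and rewrite the argument list into device paths.
    for label in "$@"; do
        if [ ! -e "$GPT_DIR/$label" ]; then
            echo "missing label: $GPT_DIR/$label" >&2
            return 1
        fi
        set -- "$@" "$GPT_DIR/$label"
        shift
    done
    if [ "$vtype" = stripe ]; then
        run zpool create "$pool" "$@"
    else
        run zpool create "$pool" "$vtype" "$@"
    fi
}
```

For example, create_pool tank raidz1 zfs1 zfs2 zfs3 would print a command for a single RAID-Z1 vdev. That vdev survives the loss of one disk, unlike the plain stripe.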

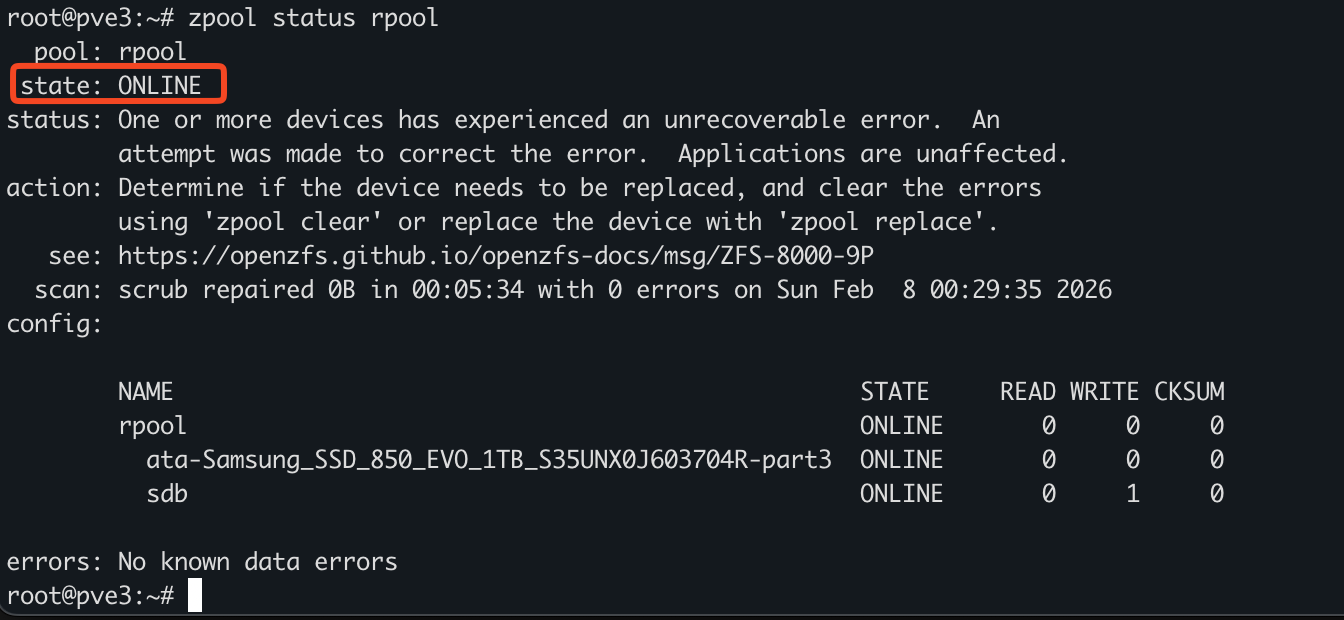

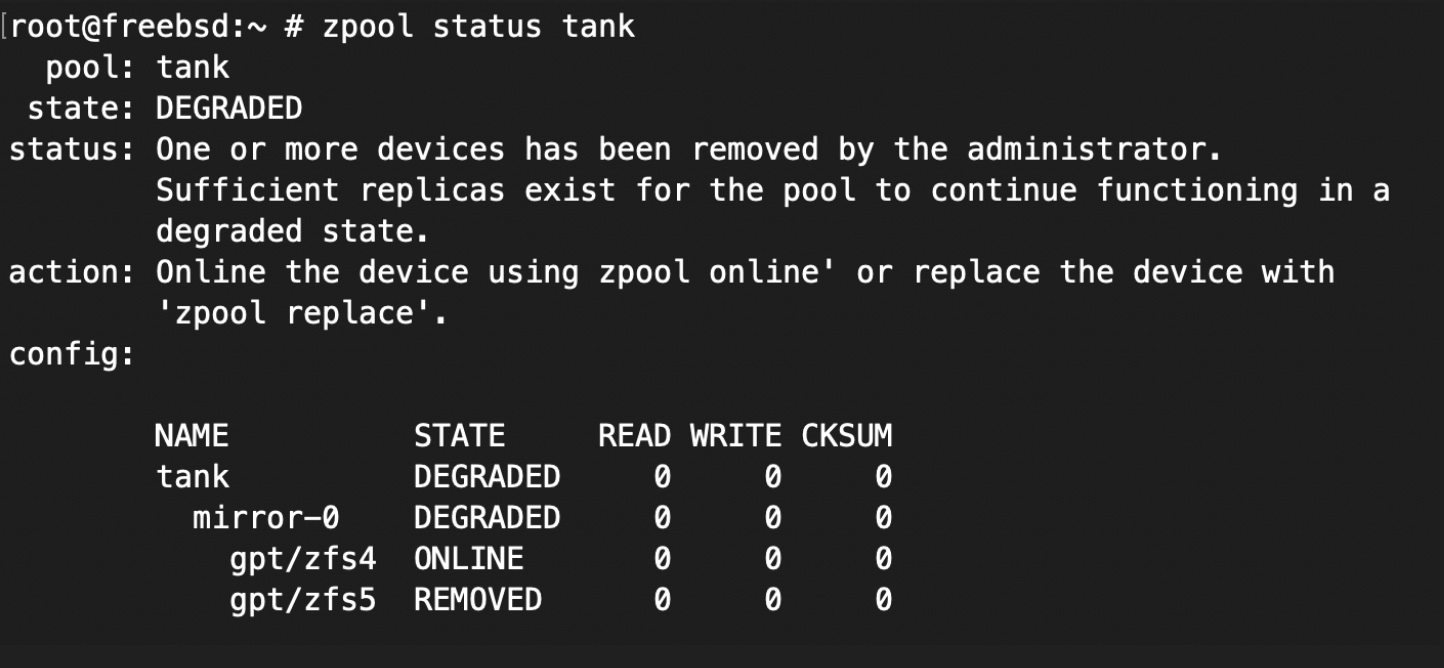

Fix Degraded Pool

- Here we see the state is DEGRADED.

- Replace the failed disk with a new one using zpool replace; ZFS then resilvers the missing data onto it.

- Remember that a pool is made by combining one or more vdevs; ZFS stripes data across the vdevs.

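- If any vdev fails, the entire pool fails. Redundancy (mirror or RAID-Z) lives inside each vdev, which is what lets a DEGRADED pool keep running until the disk is replaced.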
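The sketch below covers the replacement. It gives a new disk the same layout as the others, then hands it to zpool replace in place of the failed partition. It assumes the pool's devices appear as gpt/LABEL in zpool status. The example names (failed label zfs3, new disk da6) are hypothetical, as are replace_disk, run, and APPLY. Nothing runs unless APPLY=1 is set.

```shell
#!/bin/sh
# Dry run by default: print each command; set APPLY=1 to execute instead.
run() {
    if [ "${APPLY:-0}" = 1 ]; then "$@"; else echo "$*"; fi
}

# Usage: replace_disk POOL OLD_LABEL NEW_DISK N
#   OLD_LABEL: the failed partition's label as shown by zpool status
#   N: suffix for the new labels (swN, zfsN)
replace_disk() {
    pool=$1 old=$2 disk=$3 n=$4
    # Give the new disk the same layout as its siblings.
    run gpart create -s gpt "$disk" || return 1
    run gpart add -a 1m -s 1g -l "sw$n" -t freebsd-swap "$disk" || return 1
    run gpart add -a 1m -l "zfs$n" -t freebsd-zfs "$disk" || return 1
    # Swap the new partition in; ZFS starts resilvering onto it.
    run zpool replace "$pool" "gpt/$old" "gpt/zfs$n" || return 1
    # Resilver progress shows up in the status output.
    run zpool status "$pool"
}
```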

Tuning

- Remember, a pool splits all write requests between VDEVs in the pool.

- A single small file might only go to one VDEV, but in aggregate, writes are split between VDEVs.

- Using multiple VDEVs increases IOPS and throughput.
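The sketch below is a back-of-the-envelope estimate of why more vdevs help. It rests on a common rule of thumb, which is an assumption and not a measurement: a RAID-Z or mirror vdev handles roughly the random write IOPS of a single disk. Under that assumption, splitting the same disks into more vdevs raises the aggregate. The function estimate_iops and the per-disk figure you pass in are hypothetical.

```shell
#!/bin/sh
# Idealized model (assumption): each vdev contributes about the random
# write IOPS of one disk, and writes spread evenly across vdevs.
# Usage: estimate_iops TOTAL_DISKS DISKS_PER_VDEV IOPS_PER_DISK
estimate_iops() {
    vdevs=$(( $1 / $2 ))
    echo "vdevs=$vdevs iops=$(( vdevs * $3 ))"
}
```

For example, estimate_iops 6 2 200 models six 200-IOPS disks as three two-way mirrors. estimate_iops 6 6 200 models the same disks as one six-wide RAID-Z vdev. Check real pools with zpool iostat -v.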

Rolling Snapshot

- To keep a week of daily snapshots of pool/users and its children (-r), destroy the oldest snapshot, rename each remaining one back by a day, then take a new snapshot:

zfs destroy -r pool/users@7daysago

zfs rename -r pool/users@6daysago @7daysago

zfs rename -r pool/users@5daysago @6daysago

zfs rename -r pool/users@4daysago @5daysago

zfs rename -r pool/users@3daysago @4daysago

zfs rename -r pool/users@2daysago @3daysago

zfs rename -r pool/users@yesterday @2daysago

zfs rename -r pool/users@today @yesterday

zfs snapshot -r pool/users@today
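The rotation above generalizes to any list of snapshot names ordered oldest first. The sketch below does this and only prints the zfs commands unless APPLY=1 is set. The names rotate_snapshots, run, and APPLY are hypothetical. During the first week the older snapshots do not exist yet, so some destroy and rename steps are expected to fail. The sketch therefore keeps going, just as typing the commands by hand would.

```shell
#!/bin/sh
# Dry run by default: print each command; set APPLY=1 to execute instead.
run() {
    if [ "${APPLY:-0}" = 1 ]; then "$@"; else echo "$*"; fi
}

# Usage: rotate_snapshots DATASET NAME...   (names oldest first, newest last)
rotate_snapshots() {
    ds=$1; shift
    # Drop the oldest snapshot.
    run zfs destroy -r "$ds@$1"
    prev=$1; shift
    # Shift every remaining snapshot one slot toward "older".
    for name in "$@"; do
        run zfs rename -r "$ds@$name" "@$prev"
        prev=$name
    done
    # Recreate the newest slot.
    run zfs snapshot -r "$ds@$prev"
}
```

rotate_snapshots pool/users 7daysago 6daysago 5daysago 4daysago 3daysago 2daysago yesterday today prints the same nine commands as the manual sequence.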

ZPOOL History
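zpool history POOL lists the commands that have modified a pool. The sketch below keeps only the entries that changed the pool's layout. It assumes the usual output format: a "History for 'POOL':" header, then one line per entry made of a date.time stamp followed by the command. The function name layout_history is hypothetical.

```shell
#!/bin/sh
# Show only history entries that changed a pool's layout.
# Field 1 is the timestamp, field 2 the command name, field 3 the subcommand.
layout_history() {
    zpool history "$1" |
        awk '$2 == "zpool" && ($3 == "create" || $3 == "add" || $3 == "attach" ||
                              $3 == "detach" || $3 == "replace")'
}
```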

Ref

- Lucas, Michael W.; Jude, Allan. FreeBSD Mastery: ZFS (IT Mastery), p. 65. Kindle Edition.